How to Optimize Your Website for Search Engine Crawlers

You might have heard of Googlebot, Google’s web crawler. Crawlers like it are the automated programs that search engines use to explore the World Wide Web. Their job is to tirelessly visit websites, read their content, and work out what each page is about. From a search engine’s perspective, they’re the first visitors to your site: they discover your digital presence and report back.

If these digital explorers can’t easily find, access, or understand your website, it becomes incredibly difficult for your pages to show up when someone types a relevant query into a search bar. That’s why optimizing your website for search engine crawlers is fundamental to your online success and a core part of effective SEO.

It’s all about making your website clear, accessible, and welcoming so that these essential bots can do their job effectively. In this guide, we’ll break down the simple yet powerful strategies you can use to make friends with search engine crawlers and help them understand just how valuable your website is. Let’s dive in and make sure your digital doors are wide open!

Why Crawlers Are Your Website's First Visitors

Before anyone sees your website in search results, a crawler has most likely visited it. The process involves two main stages:

Crawling: Discovering your web pages and reading their code and content.

Indexing: Processing that information and adding it to the search engine’s massive database (the index), like adding a book to a library catalogue.

If a page isn’t crawled and indexed, it simply cannot appear in search results. That’s why making your site crawler-friendly is the absolute bedrock of being discoverable online.

Simple Ways to Help Search Engine Crawlers

So, how do you make sure your website is a welcoming and easy-to-understand place for these digital explorers? It boils down to a few key areas:

Guiding the Way: Structure and Navigation

Imagine handing someone a map. Is it clear? Does it logically show where everything is? Your website structure and how you link pages together do the same for crawlers.

Logical Site Organization: Just like grouping similar items in a store aisle, organizing your web pages into logical categories and subcategories makes sense to both humans and bots. Using clear directories in your URLs (like /products/shoes/running/) helps crawlers understand the relationships between different parts of your site. It creates a clear hierarchy that’s easy to follow.
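To make the idea concrete, here is a minimal Python sketch that lists the parent levels encoded in a directory-style URL. The function name `breadcrumb_trail` and the `example.com` address are illustrative assumptions, and search engines don’t necessarily read URLs this way; the point is simply that each directory in the path names one level of your hierarchy.

```python
from urllib.parse import urlparse


def breadcrumb_trail(url: str) -> list[str]:
    """Return each ancestor directory of a URL, from the top level down (hypothetical helper)."""
    # "/products/shoes/running/" -> ["products", "shoes", "running"]
    segments = [s for s in urlparse(url).path.split("/") if s]
    # Every prefix of the segment list is one level of the site hierarchy.
    return ["/" + "/".join(segments[: i + 1]) + "/" for i in range(len(segments))]


print(breadcrumb_trail("https://example.com/products/shoes/running/"))
# ['/products/', '/products/shoes/', '/products/shoes/running/']
```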

Clear and Descriptive URLs: Your web address (URL) is like the signpost for a specific page. Make it count! Use simple, readable URLs with words related to the page’s content. Avoid confusing strings of random characters. A clear URL gives crawlers (and users) an instant clue about what they’ll find on the page.
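If your CMS lets you set URLs by hand, a small helper like this hypothetical `slugify` function shows one common way to turn a page title into a short, readable slug: lowercase ASCII words joined by hyphens. It’s a sketch of one convention, not the only valid one; for example, it simply drops accents and punctuation.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Turn a page title into a short, readable, lowercase URL slug."""
    # Split accented letters into base letter + accent mark, then drop non-ASCII marks.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Replace each run of non-alphanumeric characters with a single hyphen.
    text = re.sub(r"[^a-zA-Z0-9]+", "-", text).strip("-")
    return text.lower()


print(slugify("Best Running Shoes for Beginners!"))  # best-running-shoes-for-beginners
```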

Smart Internal Linking: The links between pages on your website are like pathways connecting different rooms in your building. Crawlers follow these paths! Use internal linking strategically to connect related content. Crucially, use descriptive anchor text (the clickable words of the link).

Instead of “Click Here,” link with words that describe the destination page, like “learn more about SEO best practices.” This helps crawlers understand the context of the pages you’re linking to.
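If you want to audit existing pages for vague anchor text, a short script using Python’s built-in `html.parser` can flag links whose visible text is a generic phrase. The `GENERIC_ANCHORS` set and the `AnchorAudit` class are hypothetical starting points, not an official list; adjust them for your own site.

```python
from html.parser import HTMLParser

# Hypothetical list of generic phrases that say nothing about the destination page.
GENERIC_ANCHORS = {"click here", "here", "read more", "learn more", "this link"}


class AnchorAudit(HTMLParser):
    """Collect (href, anchor text) pairs and flag links with generic anchor text."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[tuple[str, str]] = []
        self._href = None  # href of the <a> element we are currently inside, if any
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse whitespace so "Click\n  here" becomes "Click here".
            text = " ".join("".join(self._text).split())
            self.links.append((self._href, text))
            self._href = None

    def generic_links(self) -> list[tuple[str, str]]:
        return [(href, text) for href, text in self.links if text.lower() in GENERIC_ANCHORS]


audit = AnchorAudit()
audit.feed('<p><a href="/seo-guide">Click here</a> or read our '
           '<a href="/seo-guide">guide to SEO best practices</a>.</p>')
print(audit.generic_links())  # [('/seo-guide', 'Click here')]
```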

Having a clear structure and thoughtful internal links gives crawlers an easy-to-follow map of your entire website.

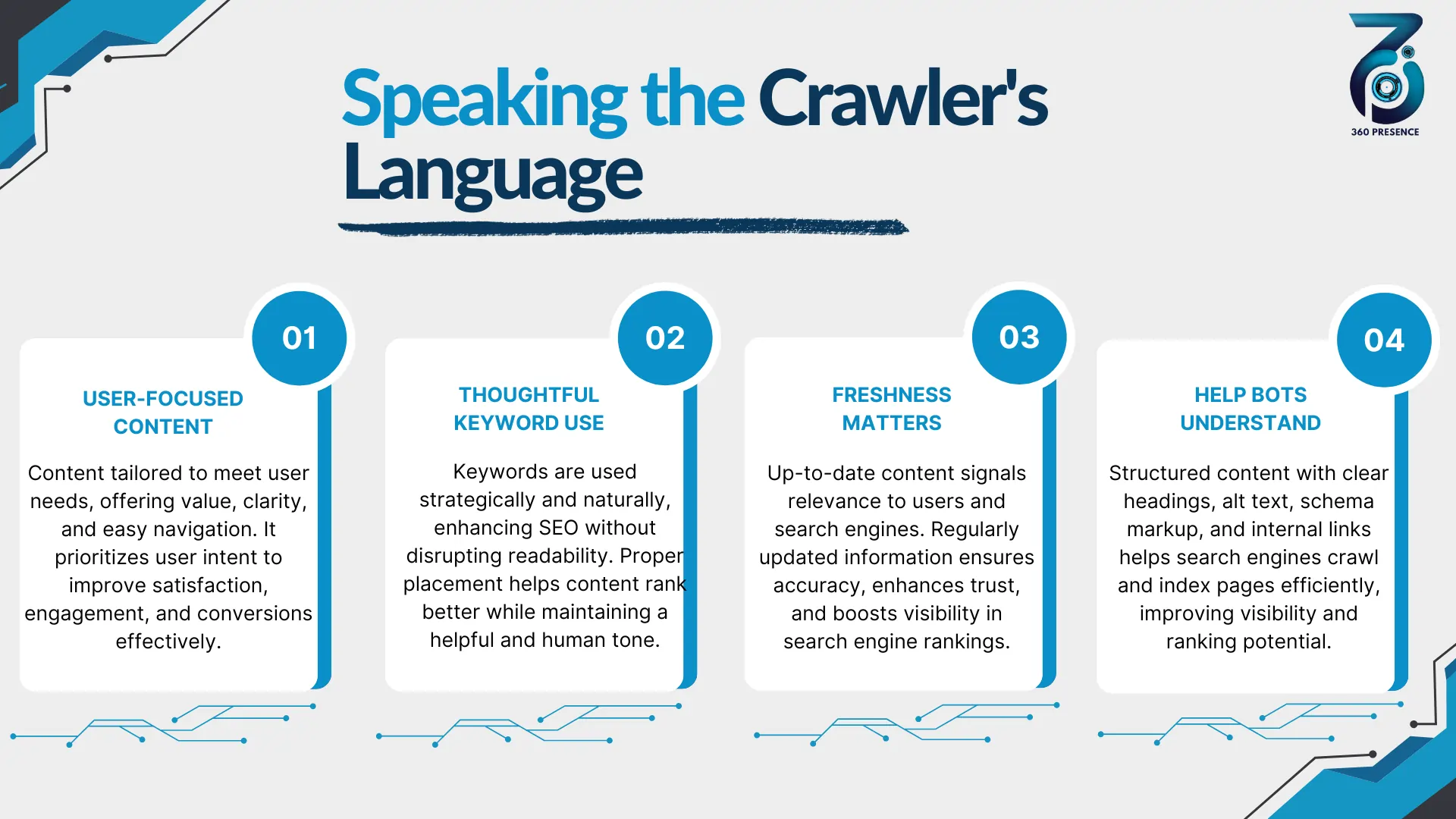

Speaking the Crawler’s Language: Content Optimization

Crawlers read code, but they’re also getting smarter at understanding the actual meaning of your content. Helping them understand your message is key.

High-Quality, User-Focused Content: This is the golden rule. Create content that is valuable, informative, engaging, and meets the needs of your human visitors. Search engines prioritize content that people find genuinely helpful. If your content is good for users, it’s generally good for crawlers and rankings.

Thoughtful Keyword Use: Keywords are the bridges between what people search for and the content you provide. Include relevant keywords naturally within your content. Think about the words and phrases your target audience would use. Incorporate these terms in your page title tags, meta descriptions, headings (H1s, H2s), and within the body text itself. But please avoid “keyword stuffing” – unnaturally forcing keywords in – it makes your content hard to read and can be penalized.
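Search engines don’t publish a “safe” keyword density, so treat any number as a rough signal rather than a target. Still, a quick count can help you spot a page where a phrase has been forced in. This sketch, built around a hypothetical `keyword_density` helper, reports how often an exact phrase appears per 100 words.

```python
import re


def keyword_density(text: str, phrase: str) -> float:
    """Return exact-phrase occurrences per 100 words of text (case-insensitive)."""
    # Lowercase and keep only word characters so punctuation doesn't break matches.
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", phrase.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    # Slide an n-word window across the text and count exact matches.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i : i + n] == target)
    return 100 * hits / len(words)


copy = "These running shoes are light, cheap, and great for beginners."
print(keyword_density(copy, "running shoes"))  # 10.0
```

A figure far above what natural writing produces, as in a sentence that repeats the same phrase every few words, is a hint to reread the page as a human visitor would.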

Freshness Matters: Regularly updated websites signal to search engines that they are active and relevant. Refreshing old content or publishing new, high-quality content can encourage crawlers to visit your site more frequently.

Help Bots Understand Meaning with Markup: To give crawlers extra help understanding specific details on your page (like a product’s price, a recipe’s cooking time, or an event’s location), you can add structured data markup using a vocabulary such as Schema.org. This is like adding special labels to your content that crawlers can easily read and interpret. It gives crawlers, including increasingly sophisticated AI crawlers, a much clearer understanding of the specific information on your page, and it can make your pages eligible for richer, more appealing listings in search results (rich results, often called rich snippets).
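Structured data is usually added as a JSON-LD `<script>` block, the format Google recommends. The sketch below builds a minimal Schema.org `Product` with an `Offer` price. The helper name and sample values are assumptions, and the properties a search engine requires for a given rich result vary by result type, so validate the output with a tool such as Google’s Rich Results Test.

```python
import json


def product_jsonld(name: str, price: str, currency: str) -> str:
    """Build a minimal Schema.org Product snippet wrapped in a JSON-LD <script> tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": price,
            "priceCurrency": currency,  # ISO 4217 currency code
        },
    }
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")


print(product_jsonld("Trail Runner 2", "89.99", "USD"))
```

The printed tag goes in the page’s HTML, typically in the `<head>`, alongside the visible product details it describes.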

Optimizing your content means ensuring that the stories you tell on your website are clear, relevant, and easy for crawlers to process and categorize.

How to Make Your Website Easy for Search Engines to Crawl

Sometimes, hidden technical issues can block crawlers or slow them down. Addressing these is crucial for smooth sailing.

Blazing Fast Page Speed: Crawlers have limited time and resources. A slow website not only frustrates visitors but also limits how many pages crawlers can visit during a single crawl. Improving your page speed makes your site more efficient for both users and bots.

Submitting a Sitemap: An XML sitemap is a file on your server that lists the important pages you want search engines to know about. It’s essentially a VIP list for crawlers. Submitting it to Google Search Console (and other search engines’ webmaster tools) is a must. It helps crawlers discover all your key pages, including those that are hard to reach through links.
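Most content management systems and SEO plugins generate a sitemap for you, but the format itself is simple. This minimal sketch writes a sitemap in the sitemaps.org XML format with Python’s standard library; the `build_sitemap` name, URLs, and dates are placeholders. Each `<loc>` must be an absolute URL, and `<lastmod>` is optional.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Return sitemap XML for (absolute URL, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # absolute URL; ElementTree escapes "&" etc.
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date format, e.g. 2024-05-01
    ET.indent(urlset)  # pretty-print the tree (Python 3.9+)
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://example.com/", "2024-05-01"),
    ("https://example.com/products/shoes/running/", "2024-04-18"),
]))
```

You would then save the output as a file such as `sitemap.xml` on your site and submit its URL in Search Console.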

Managing Access with Robots.txt: You have control over where crawlers go! The robots.txt file at the root of your site tells crawlers which areas they may and may not visit. This is useful for blocking low-value pages (like internal search results, login pages, or temporary content) so your crawl budget (the resources a search engine allocates to crawling your site) goes to the pages that matter most. Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it. Just be careful not to accidentally block important pages!
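Before deploying robots.txt changes, it’s worth testing which URLs your rules actually block. Python’s standard `urllib.robotparser` module can run a quick check. The rules, paths, and `example.com` domain below are illustrative assumptions. Note that this parser follows the original robots.txt conventions and doesn’t replicate every detail of how Google interprets rules (for example, `*` and `$` wildcards inside paths), so confirm important rules with the search engine’s own tools.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules: keep crawlers out of internal search results and the login page.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /login

Sitemap: https://example.com/sitemap.xml
"""

robots = RobotFileParser()
robots.parse(ROBOTS_TXT.splitlines())

for path in ["/products/shoes/running/", "/search?q=shoes", "/login"]:
    allowed = robots.can_fetch("Googlebot", "https://example.com" + path)
    print(path, "allowed" if allowed else "blocked")
# /products/shoes/running/ allowed
# /search?q=shoes blocked
# /login blocked
```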

Handling Duplicate Content Gracefully: Sometimes the same content appears at different URLs (for example, because of URL parameters or other technical setup). This can confuse crawlers and dilute authority. A canonical tag tells search engines which version of a page is the “master” copy you want them to index and rank. This keeps the search engine’s view of your site clean and focused.
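A canonical tag is a `<link rel="canonical" href="...">` element in the page’s `<head>`. When auditing duplicates, you can read it back from each URL variant to confirm they all point to the same master copy. This sketch uses Python’s built-in `html.parser`; the `CanonicalFinder` class, `canonical_of` helper, and sample URLs are hypothetical.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Record the href of the first <link rel="canonical"> element in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        # rel may list several space-separated values, so split before checking.
        rels = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and self.canonical is None:
            self.canonical = attrs.get("href")


def canonical_of(html: str):
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


# Two URL variants of the same listing (say, sorted differently), both naming one master URL.
variant_a = '<head><link rel="canonical" href="https://example.com/products/shoes/"></head>'
variant_b = '<head><link rel="canonical" href="https://example.com/products/shoes/" /></head>'
print(canonical_of(variant_a) == canonical_of(variant_b))  # True
```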

Addressing these technical aspects ensures crawlers can efficiently access and process your site without hitting frustrating roadblocks.

How to Make Your Website Mobile-Ready for Crawlers

The world is increasingly mobile, and so is Google’s indexing!

A Seamless Mobile Experience: Since Google primarily uses the mobile version of your site for indexing (mobile-first indexing), having a website that looks and works great on mobile devices is non-negotiable. A responsive design that adapts to different screen sizes is the standard. If crawlers struggle to use your mobile site, it will impact their understanding of your content.
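Responsive pages normally declare a viewport meta tag such as `<meta name="viewport" content="width=device-width, initial-scale=1">`. Its presence doesn’t prove a page works well on phones, but its absence is a quick red flag. Here is a minimal check with Python’s `html.parser`; the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class ViewportCheck(HTMLParser):
    """Capture the content attribute of a <meta name="viewport"> tag, if present."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "viewport":
            self.viewport = attrs.get("content")


def has_responsive_viewport(html: str) -> bool:
    checker = ViewportCheck()
    checker.feed(html)
    # Ignore spacing and case so "width = device-width" still matches.
    content = (checker.viewport or "").replace(" ", "").lower()
    return "width=device-width" in content


print(has_responsive_viewport(
    '<meta name="viewport" content="width=device-width, initial-scale=1">'))  # True
```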

Consistent Content Across Devices: Ensure that the main content on your mobile pages is the same as on your desktop pages. Don’t hide important text or features just for the mobile version, as Google might miss it during the mobile-first crawl.

Making your site mobile-friendly means you’re optimizing for the version of your site that Googlebot uses most for understanding and ranking.

The Future is AI: Preparing for Smarter Bots

As AI becomes more integrated into search, helping bots understand not just words but concepts and relationships becomes even more important.

Clean Code for Smart Reading: Presenting your content with clean, well-structured HTML makes it easier for sophisticated AI crawlers to parse and interpret the information accurately. Think of it as providing a clean, easy-to-scan document rather than a messy one.
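One practical way to check for well-structured HTML is to look at the heading outline. The sketch below, using Python’s built-in `html.parser`, flags a page with no `<h1>` and headings that skip a level. These are common structural conventions rather than documented ranking rules, and the `HeadingOutline` and `outline_problems` names are hypothetical.

```python
from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Collect heading levels (h1-h6) in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag names, so "H2" arrives as "h2".
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def outline_problems(html: str) -> list[str]:
    """Flag a missing <h1> and headings that skip a level (e.g. h2 -> h4)."""
    parser = HeadingOutline()
    parser.feed(html)
    problems = []
    if 1 not in parser.levels:
        problems.append("no <h1> found")
    for prev, cur in zip(parser.levels, parser.levels[1:]):
        if cur > prev + 1:
            problems.append(f"heading jumps from <h{prev}> to <h{cur}>")
    return problems


print(outline_problems("<h1>Running Shoes</h1><h3>Trail Models</h3>"))
# ['heading jumps from <h1> to <h3>']
```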

Consider AI-Specific Instructions (Emerging): While still a developing area, keep an eye out for potential standards like an llms.txt file, which might emerge as a way to provide specific instructions to large language models or AI systems regarding how they interact with your content. For now, focusing on clarity, structure, and semantic markup is the best way to help AI understand.

While AI in search is constantly evolving, focusing on clarity, structure, and meaning in your content will set you up well for the future.

Keeping Watch: Monitoring and Improvement

Making your site crawler-friendly isn’t a one-and-done task. It requires ongoing attention and care.

Your Best Friend: Google Search Console. This free tool from Google is your direct window into how Googlebot sees your site. Use it regularly! Check the Page indexing report (formerly called Index Coverage) to see which pages are indexed and whether any have errors. Use the URL Inspection tool to test specific pages and see how Google last crawled and rendered them. This tool is invaluable for diagnosing and fixing crawler issues.

Stay Curious, Stay Updated: The world of SEO and search engine algorithms is always changing. Keep learning, follow industry news, and stay informed about the latest best practices for crawling and indexing.

By regularly monitoring and staying informed, you can quickly address any issues and ensure your website remains easily accessible to crawlers.

Conclusion

Optimizing your website for search engine crawlers is foundational to successful SEO. It’s about being a good digital host: making your site easy to find, easy to navigate, and easy to understand for the bots that are responsible for indexing the web. By focusing on a logical structure, high-quality and well-marked-up content, solid technical performance, mobile-friendliness, and keeping an eye on AI-driven changes, you significantly improve your website’s crawlability and indexability.

These are the essential first steps to appearing in search results. By implementing these strategies, you can improve your website’s crawlability, indexing, and, ultimately, its search rankings. So, roll out that digital welcome mat, speak the crawler’s language, and watch your website become more visible to the world!

Partner with Nauman Oman

© 2023 360PRESENCE All rights Reserved